I share Blanchard’s vision that “The pursuit of a widely accepted analytical macroeconomic core, in which to locate discussions and extensions, may be a pipe dream, but it is a dream surely worth pursuing”. But he—and Neoclassical economics in general—err with the false belief that “Starting from explicit microfoundations is clearly essential; where else to start from?” (Blanchard 2016, p. 3). The answer to Blanchard’s purportedly rhetorical question is that the proper foundation of macroeconomics is not microeconomic theory, but macroeconomics itself.

This is chapter six of my draft book Rebuilding Economics from the Top Down, which will be published later this year by the Budapest Centre for Long-Term Sustainability.

The previous chapter was I’M NOT DISCREET, AND NEITHER IS TIME, which is published here on Patreon and here on Substack.


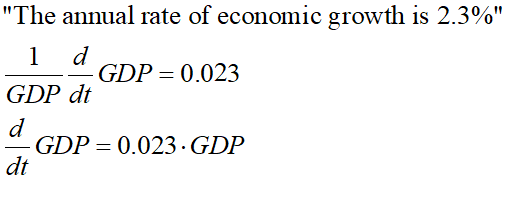

This may sound paradoxical: how can your foundations be what you are trying to build on those foundations? But in fact, macroeconomic definitions which all economists must accept—simply because they are both true by definition, and essential to the study of the macroeconomy—can easily be turned into dynamic statements that enable the development of a realistic macroeconomic dynamics.

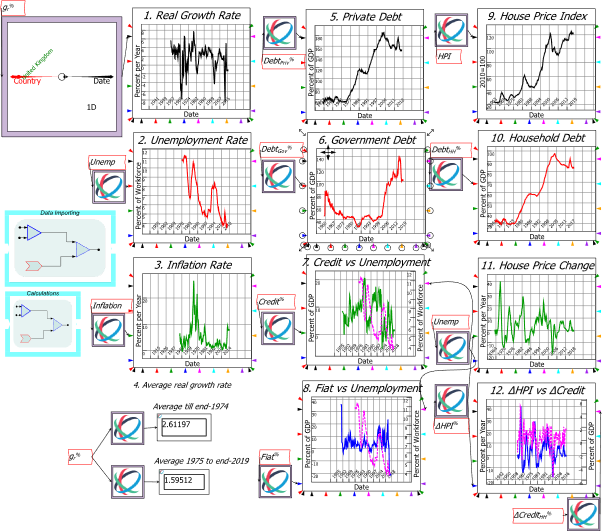

This process of building macroeconomics from macroeconomic definitions yields simple models which fit the data with far less use of arbitrary parameters than Neoclassicals impose on their “microfounded” models—and no use at all of carefully calibrated “exogenous shocks”—and which can be easily extended and made more realistic by adding further definitions (Keen 2020).

Since this chapter—and the models in it—is necessarily complex, I’ll start with its key takeaways. Working directly from incontestably true macroeconomic definitions, it is obvious that:

- Capitalism is an inherently cyclical system (rather than an equilibrium system);

- It is liable to collapse into a debt-deflation; but

- It can be stabilized by counter-cyclical government spending.

These conclusions are the opposite of the a priori biases of Neoclassical microeconomics. And, these results are derived from definitions that all economists must accept, which are turned into dynamic models using empirically realistic simplifying assumptions. The contrary Neoclassical beliefs that capitalism tends towards equilibrium, that debt-deflations are impossible given the (as usual, false) assumptions of the Loanable Funds model of banking, and that government intervention almost always makes the social welfare outcome worse, are based on foundations that are rotten, both intellectually and empirically.


Inherent Complexity and Cyclicality

Though, as noted in Chapter 3, three dimensions are needed to generate a fully complex system, two fundamental definitions are sufficient to demonstrate how different this approach is to Neoclassical modelling—and how realistic it is as well. These two definitions are the employment rate, and wages share of GDP: the former characterises the level of economic activity, and the latter the distribution of income.

The employment rate is how many people are employed, divided by the population; the wages share of GDP is the total wage bill, divided by GDP. Using for the employment rate, L for employment, N for population (because I’ll later use P for Prices), for the wages share of GDP, W for total wages, and Y for GDP, the starting definitions for a genuinely well-founded macroeconomics are:

and

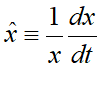

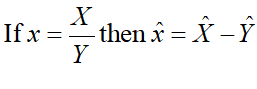

Applying the rules discussed in the previous chapter to Equation yields:

The same operation applied to Equation yields:

These two equations make two extremely simple, and obviously true, dynamic statements:

- The employment rate will rise if employment rises faster than population; and

- The wages share of GDP will rise if total wages rise faster than GDP.
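The first of these statements can be checked numerically. The following is a minimal sketch, assuming hypothetical exponential paths for employment and population (the 3% and 1% growth rates and the starting levels are invented for illustration): the growth rate of the ratio equals the difference between the two growth rates.

```python
import math

def log_growth(series, dt):
    """Average growth rate per unit of time over each step, from log differences."""
    return [(math.log(b) - math.log(a)) / dt for a, b in zip(series, series[1:])]

# Hypothetical illustrative paths: employment grows 3% a year, population 1% a year.
dt = 0.01
times = [i * dt for i in range(1001)]
employment = [100.0 * math.exp(0.03 * t) for t in times]
population = [160.0 * math.exp(0.01 * t) for t in times]
employment_rate = [l / n for l, n in zip(employment, population)]

g_rate = log_growth(employment_rate, dt)
g_emp = log_growth(employment, dt)
g_pop = log_growth(population, dt)

# The growth rate of the ratio equals the difference of the two growth rates.
max_gap = max(abs(gr - (ge - gp)) for gr, ge, gp in zip(g_rate, g_emp, g_pop))
print(f"employment-rate growth: {g_rate[0]:.4f}, largest discrepancy: {max_gap:.2e}")
```

The same check works for the wages share by substituting total wages and GDP for employment and population.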

Deriving a dynamic model from these true-by-definition statements is a straightforward task that I have put in a later section, so that I can focus here on the essential point that a realistic and inherently cyclical macroeconomic model can be derived directly from macroeconomic definitions which are beyond dispute.

The model derived from these two definitions is a pair of coupled differential equations, one for the employment rate and one for the wages share.
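As a hedged sketch only, a system of this kind can be written in the form below. It assumes that all profits are invested, that output is proportional to capital, and that wage change depends on the employment rate. Apart from the capital to output ratio (written $K_{Yr}$ here for the KYr named in the text), the symbols are assumptions rather than the chapter's own notation: $\lambda$ is the employment rate, $\omega$ the wages share, $\delta$ the depreciation rate, $\alpha$ the growth rate of labour productivity, $\beta$ the growth rate of population, and $w(\lambda)$ the wage change function.

```latex
\begin{aligned}
\frac{d\lambda}{dt} &= \lambda \left( \frac{1-\omega}{K_{Yr}} - \delta - \alpha - \beta \right) \\
\frac{d\omega}{dt} &= \omega \left( w(\lambda) - \alpha \right)
\end{aligned}
```

The first line says that employment grows at the rate of output growth less productivity growth, and that the employment rate rises when this exceeds population growth. The second says that the wages share rises when wage growth exceeds productivity growth.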

Developing this model required the introduction of several parameters, whose names were chosen to make their meaning relatively easy to interpret: KYr is the Capital to Output ratio, for example. The names, meanings and values of all the parameters in this model are given in Table 3.

Table 3: Parameters in the models

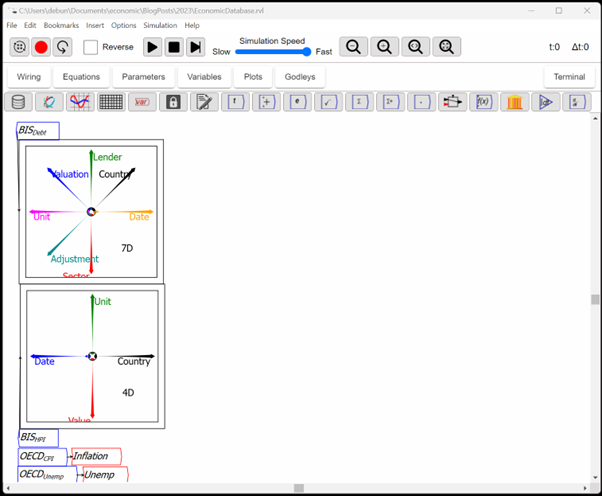

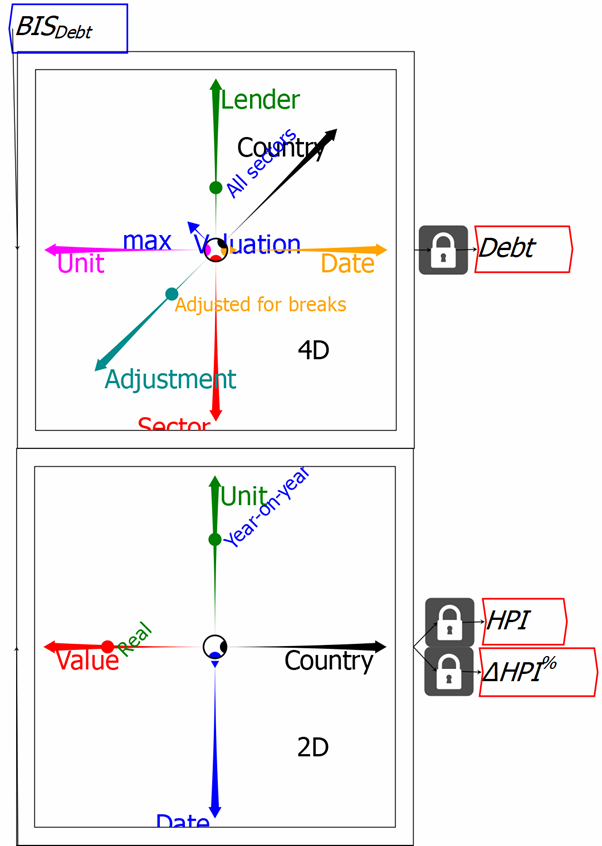

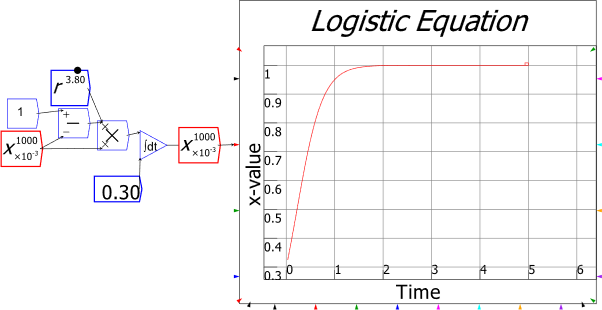

There are many programs that can simulate this model, from mathematical systems like Mathematica and Maple to system dynamics programs like Vensim … and Minsky. I use Minsky because (a) I invented it; (b) it’s free; and (c) it’s the only program designed to model the dynamics of money, which becomes critically important in subsequent chapters.
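For readers without any of these programs, a two-variable model of this type can also be simulated in a few lines of plain Python. The sketch below is an illustration under stated assumptions, not the Minsky model itself: it assumes a linear wage change function, that all profits are invested, and hypothetical parameter values that are not those of Table 3. It integrates the system with a fourth-order Runge-Kutta step and tracks a quantity that stays constant along each orbit, which is what makes the cycles persist rather than die out.

```python
import math

# Hypothetical parameter values chosen for illustration only (not those of Table 3).
PARAMS = {
    "KYr": 3.0,         # capital to output ratio
    "delta": 0.05,      # depreciation rate per year
    "alpha": 0.02,      # growth rate of labour productivity
    "beta": 0.015,      # growth rate of population
    "wage_slope": 2.0,  # slope of the assumed linear wage change function
    "wage_zero": 0.93,  # employment rate at which wage change is zero
}

def goodwin_rhs(state, p):
    """Rates of change of (employment rate, wages share)."""
    lam, omega = state
    growth = (1.0 - omega) / p["KYr"] - p["delta"]  # assumes all profits are invested
    wage_change = p["wage_slope"] * (lam - p["wage_zero"])
    return (lam * (growth - p["alpha"] - p["beta"]),
            omega * (wage_change - p["alpha"]))

def rk4_step(rhs, state, dt, p):
    """One classical fourth-order Runge-Kutta step."""
    def shifted(k, h):
        return tuple(s + h * ki for s, ki in zip(state, k))
    k1 = rhs(state, p)
    k2 = rhs(shifted(k1, dt / 2), p)
    k3 = rhs(shifted(k2, dt / 2), p)
    k4 = rhs(shifted(k3, dt), p)
    return tuple(s + dt / 6 * (a + 2 * b + 2 * c + d)
                 for s, a, b, c, d in zip(state, k1, k2, k3, k4))

def simulate(rhs, state, years, dt, p):
    """Return the list of states from time 0 to `years` in steps of `dt`."""
    path = [state]
    for _ in range(int(round(years / dt))):
        state = rk4_step(rhs, state, dt, p)
        path.append(state)
    return path

def equilibrium(p):
    """Employment rate and wages share at which both rates of change are zero."""
    lam_eq = p["wage_zero"] + p["alpha"] / p["wage_slope"]
    omega_eq = 1.0 - p["KYr"] * (p["alpha"] + p["beta"] + p["delta"])
    return lam_eq, omega_eq

def invariant(state, p):
    """Quantity that is constant along any orbit of this two-variable system."""
    lam, omega = state
    a = 1.0 / p["KYr"] - p["delta"] - p["alpha"] - p["beta"]
    b = 1.0 / p["KYr"]
    c = p["wage_slope"]
    d = p["wage_slope"] * p["wage_zero"] + p["alpha"]
    return c * lam - d * math.log(lam) + b * omega - a * math.log(omega)

path = simulate(goodwin_rhs, (0.95, 0.75), years=50, dt=0.01, p=PARAMS)
lam_values = [s[0] for s in path]
print("equilibrium:", equilibrium(PARAMS))
print("employment rate range:", min(lam_values), max(lam_values))
```

Starting away from the equilibrium, the employment rate keeps swinging above and below its equilibrium value without converging to it.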

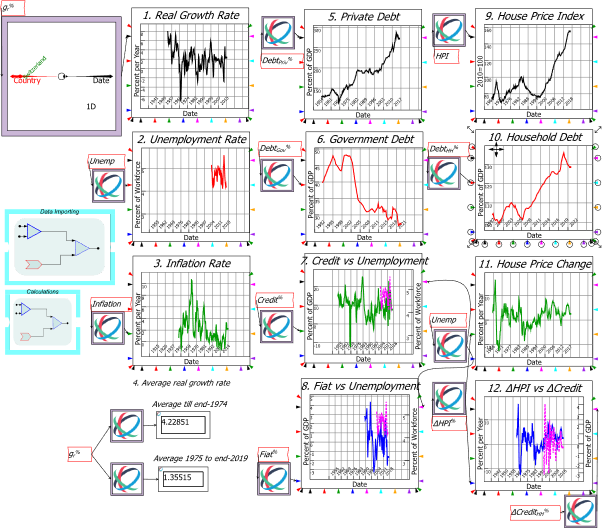

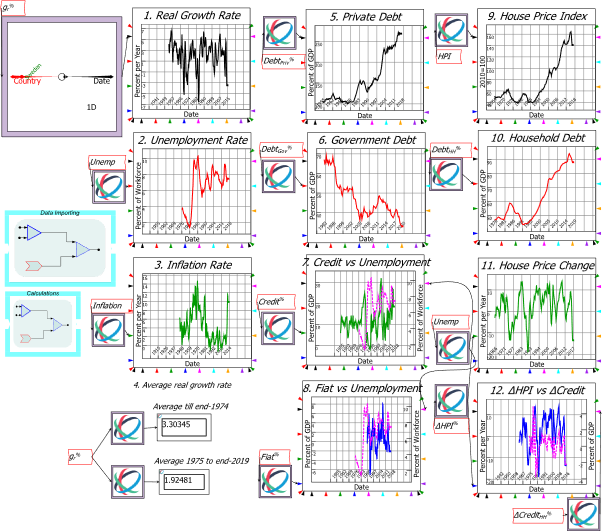

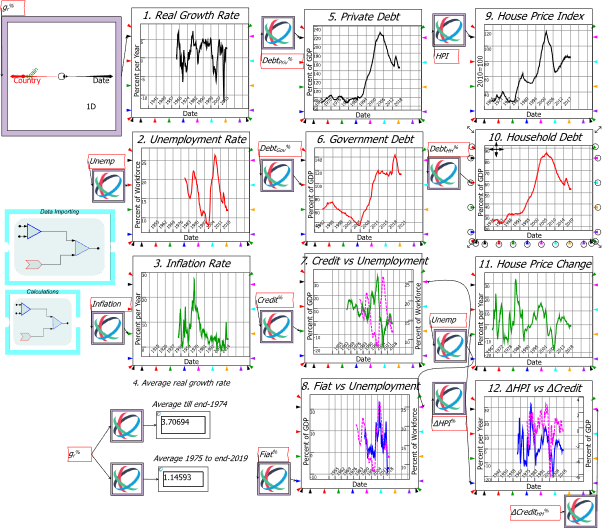

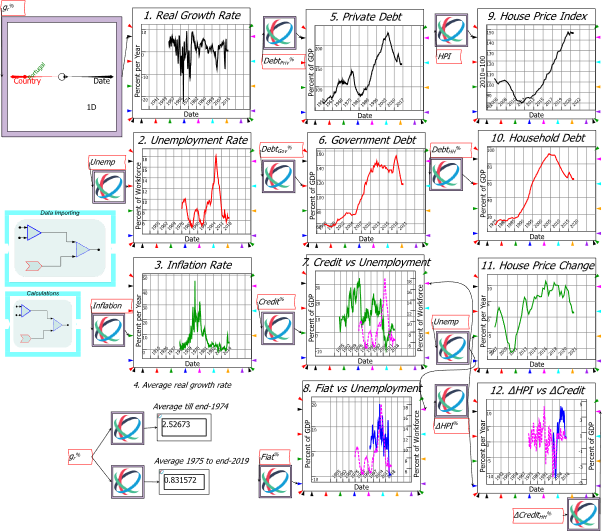

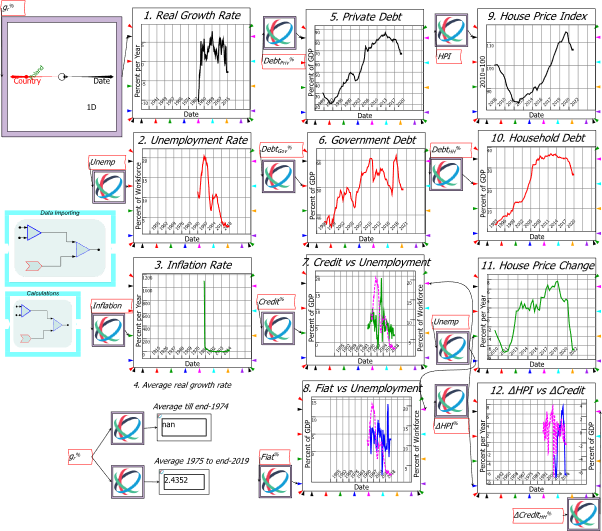

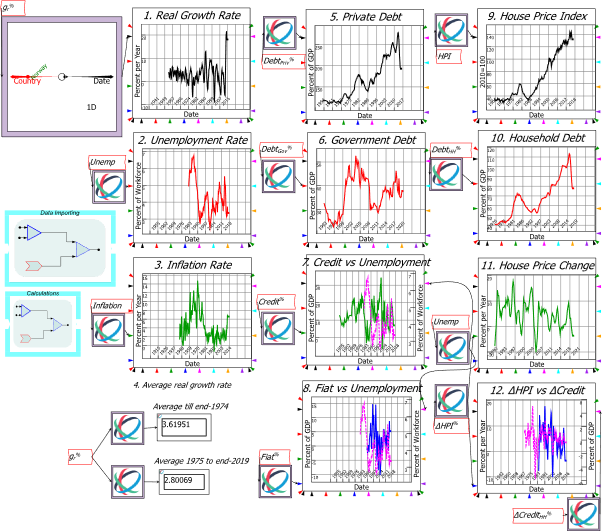

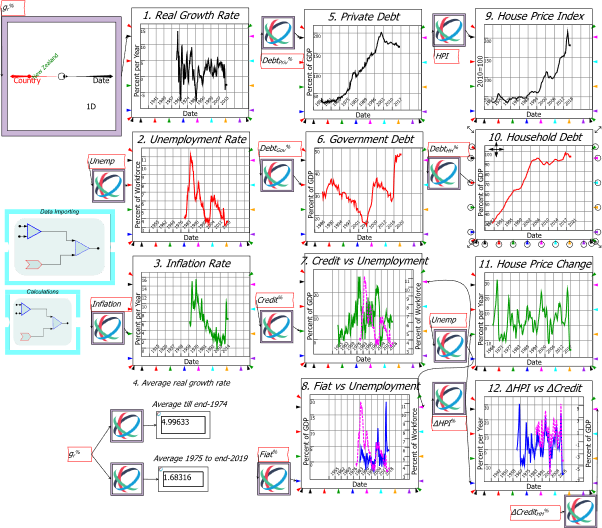

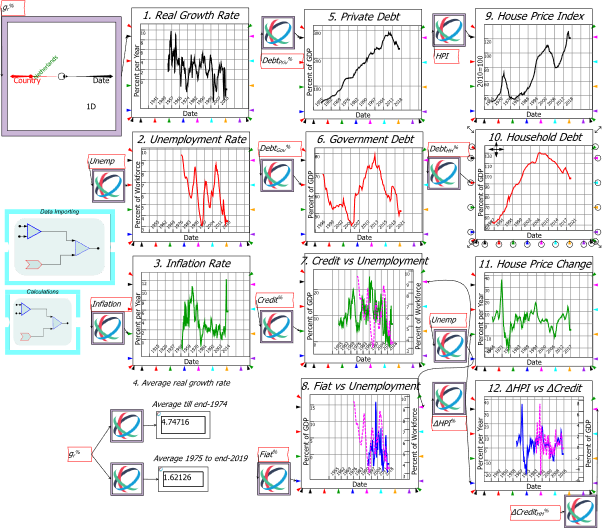

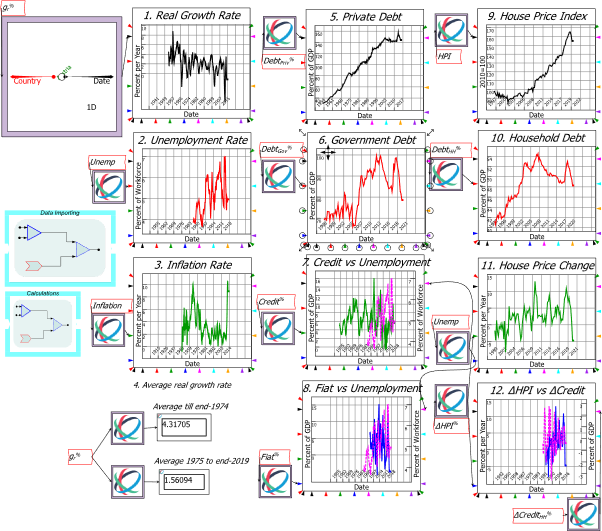

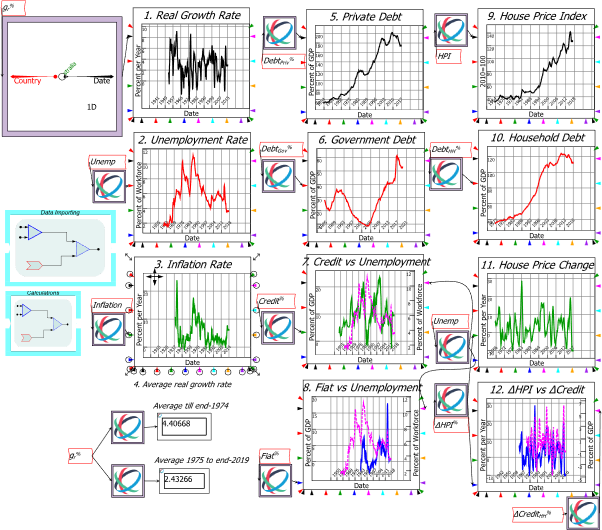

The model is inherently cyclical, as Figure 13 illustrates.

Figure 13: Inherent and endemic cycles in a definitions-based dynamic macroeconomic model

Capitalism is therefore at its core a cyclical, rather than an equilibrium system. The Neoclassical portrayal of capitalism as a system that always returns to equilibrium after a disturbance is both a relic of the 19th century belief that equilibrium was an unfortunate but necessary assumption needed to enable modelling (Jevons 1888, p. 93—a belief which ceased to be valid in the mid-20th century), and a characteristic that Neoclassicals artificially impose on their RBC and DSGE models, because they have elevated equilibrium from a modelling compromise into a critical component of their vision of capitalism as a welfare-maximizing system—which it isn’t.

Given that this model can be derived directly from macroeconomic definitions, with none of the arbitrary assumptions that characterized Ramsey’s derivation of his growth model—let alone the crazy assumptions added by later Neoclassicals to apply Ramsey’s model to the macroeconomy (Solow 2010, p. 13)—this model should be regarded as half of the foundational model of macroeconomics—half because it does not yet include the financial sector or the government, which I add in the next two sections.

This model is, in fact, Richard Goodwin’s “growth cycle” model, which he developed in 1965 (Goodwin 1966; Goodwin 1967). My sole contribution here is to show that, rather than being based on “ad-hoc” equations, Goodwin’s model can be directly and easily derived from strictly true macroeconomic definitions, and straightforward simplifying assumptions.

Goodwin’s model has been neglected in economics, largely because Neoclassical economists abhor non-equilibrium systems, but also because of an unfortunate paper—for which I must confess that I was one of the referees who recommended its publication—which incorrectly derided its empirical accuracy. Entitled “Testing Goodwin: Growth Cycles in Ten OECD Countries”, it concluded that “At a quantitative level, Goodwin’s … estimated parameter values poorly predict the cycles’ centres” (Harvie 2000, p. 359).

In fact, this conclusion was due to a mistake by Harvie that he later frankly described to me as a “typical schoolboy error”: he used numbers in percentages, when his work had been done in fractions. That put his numbers out by a factor of 100, a fact that I only discovered when I attempted to use his parameters in a model. This mistake was corrected by Grasselli and Maheshwari (2017), who found that the properly calibrated model was consistent with the data for OECD countries.

Figure 14 illustrates this with respect to US data from 1948 till 1968: using historically reasonable parameter values, the equilibrium of the model in Figure 13 precisely reproduces the average value for the employment rate and wages share between 1948 and 1968, even though the model is still very incomplete. The model also reproduces the cyclicality of the empirical data, something that a Neoclassical model cannot do without adding (carefully calibrated!) “exogenous shocks”, though not the actual magnitude of those cycles.

Figure 14: USA Employment and Wages Share Dynamics from 1948 till 1968

The next section completes this as a model of a pure capitalist economy by introducing the financial sector, in the form of the private debt to GDP ratio (in a later chapter, I explain why private debt is an essential component of the foundational model of macroeconomics).


Debt-Deflation in a Pure Credit Economy

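The debt ratio $d_r$ is the level of private debt ($D_P$) divided by GDP:

$$d_r = \frac{D_P}{Y}$$

In dynamic form, this definition is:

$$\frac{\dot{d_r}}{d_r} = \frac{\dot{D_P}}{D_P} - \frac{\dot{Y}}{Y}$$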

As usual, this equation has a straightforward verbal interpretation: the private debt ratio will rise if private debt grows faster than GDP.

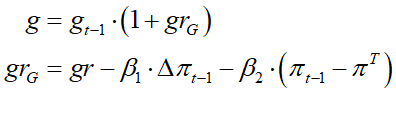

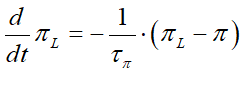

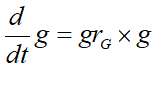

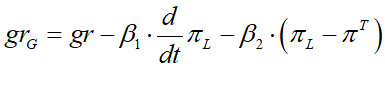

Several modifications to the previous model are required to integrate private debt dynamics into it. Debt means interest payments, so the rate of interest $r$ was added as a parameter (it can be a variable in more elaborate models); profit is now net of interest payments as well as of wages; and Goodwin’s extreme assumption that capitalists invest all their profits is replaced by an investment function $i_G$ (based on the rate of profit $\pi_r$), which has the same form as the wage change function in the previous model, and which assumes, rather too generously, that all debt is used to finance productive investment. As explained in Section 7.6, this results in the following 3-equation system:

Here $g_r$ stands for the growth rate; $w$ stands for the wage change function; $i_G$ stands for gross investment (investment before depreciation) and is a function of the rate of profit; and the profit share $\pi_s$ is introduced, since it plays a significant (and surprising) role in the dynamics of the model.
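A three-variable system of this kind can be sketched in Python as follows. This is an illustration under explicit assumptions rather than the chapter's own equations or parameter values: the profit share is taken as one minus the wages share minus interest payments as a share of GDP, the profit rate as the profit share divided by the capital to output ratio, gross investment as a linear function of the profit rate, and the change in debt as investment minus profits. All names and numbers in the code are hypothetical, and the values are not those of Tables 3 and 4.

```python
import math

# Hypothetical values for illustration; they are NOT the values in Tables 3 and 4.
PARAMS = {
    "KYr": 3.0,         # capital to output ratio
    "delta": 0.05,      # depreciation rate per year
    "alpha": 0.02,      # growth rate of labour productivity
    "beta": 0.015,      # growth rate of population
    "wage_slope": 2.0,  # slope of the assumed linear wage change function
    "wage_zero": 0.93,  # employment rate at which wage change is zero
    "r": 0.05,          # interest rate on private debt
    "inv_slope": 5.0,   # slope of the assumed linear investment function
    "inv_zero": 0.025,  # profit rate at which gross investment is zero
}

def keen_rhs(state, p):
    """Rates of change of (employment rate, wages share, private debt ratio)."""
    lam, omega, d_r = state
    profit_share = 1.0 - omega - p["r"] * d_r                    # net of wages and interest
    profit_rate = profit_share / p["KYr"]
    investment = p["inv_slope"] * (profit_rate - p["inv_zero"])  # gross, as a share of GDP
    growth = investment / p["KYr"] - p["delta"]
    wage_change = p["wage_slope"] * (lam - p["wage_zero"])
    return (lam * (growth - p["alpha"] - p["beta"]),
            omega * (wage_change - p["alpha"]),
            investment - profit_share - d_r * growth)  # new debt finances investment above profits

def keen_equilibrium(p):
    """The interior equilibrium with positive employment and finite debt."""
    g = p["alpha"] + p["beta"]
    investment = p["KYr"] * (g + p["delta"])
    profit_rate = p["inv_zero"] + investment / p["inv_slope"]
    profit_share = profit_rate * p["KYr"]
    d_r = (investment - profit_share) / g
    omega = 1.0 - profit_share - p["r"] * d_r
    lam = p["wage_zero"] + p["alpha"] / p["wage_slope"]
    return lam, omega, d_r

def rk4_step(rhs, state, dt, p):
    """One classical fourth-order Runge-Kutta step."""
    def shifted(k, h):
        return tuple(s + h * ki for s, ki in zip(state, k))
    k1 = rhs(state, p)
    k2 = rhs(shifted(k1, dt / 2), p)
    k3 = rhs(shifted(k2, dt / 2), p)
    k4 = rhs(shifted(k3, dt), p)
    return tuple(s + dt / 6 * (a + 2 * b + 2 * c + d)
                 for s, a, b, c, d in zip(state, k1, k2, k3, k4))

def simulate(rhs, state, years, dt, p):
    """Return the list of states from time 0 to `years` in steps of `dt`."""
    path = [state]
    for _ in range(int(round(years / dt))):
        state = rk4_step(rhs, state, dt, p)
        path.append(state)
    return path

eq = keen_equilibrium(PARAMS)
path = simulate(keen_rhs, (0.95, 0.74, 0.7), years=5, dt=0.01, p=PARAMS)
print("equilibrium (employment rate, wages share, debt ratio):", eq)
print("state after 5 years:", path[-1])
```

Raising `inv_slope` in this sketch raises the equilibrium debt ratio and lowers the equilibrium wages share, since a steeper investment function needs a lower profit rate to deliver the same investment. Whether a given run converges or breaks down depends on the parameter values and starting point, so longer runs are best explored interactively.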

The parameters for the investment function, and the interest rate, are shown in Table 4.

Table 4: Parameters added to the Goodwin model to include private debt

With this model, “we’re not in Kansas anymore” when compared to the Neoclassical view of reality. The model can reach equilibrium, but most likely it will not. It will appear to be heading for equilibrium, only to cycle away from it (Pomeau and Manneville 1980). The people who don’t borrow in this simple model (workers) are the ones who pay the cost of borrowing, via a lower share of national income. The people who do borrow (capitalists) don’t pay the cost, but they are also the last ones to realise that, when the system is unstable, it is headed for a breakdown that will bankrupt them. Finally, the direct beneficiaries of rising private debt (bankers) end up owning everything of nothing.

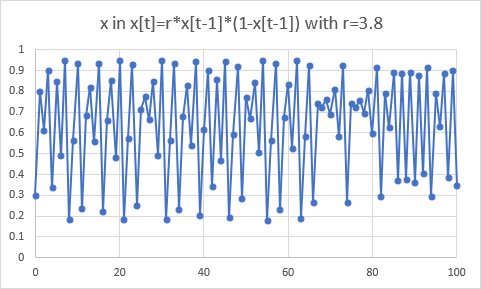

The technical reason for the much greater complexity of this model compared with Goodwin’s is that a model needs three dimensions in order to display complex behaviour: “Period Three Implies Chaos”, as Li and Yorke put it (Li and Yorke 1975). Goodwin’s model, with just two dimensions (the employment rate $\lambda$ and the wages share $\omega$), is constrained by the nature of differential equations to display a very limited range of dynamic behaviours. But when the third dimension of the private debt ratio $d_r$ is added, much more complex and realistic behaviours can be generated.

Figure 15 shows a run of the model that does converge to equilibrium (though very slowly: it takes a millennium for the cycles to become imperceptible).

Figure 15: The model with investment and capital to output parameters that lead to equilibrium

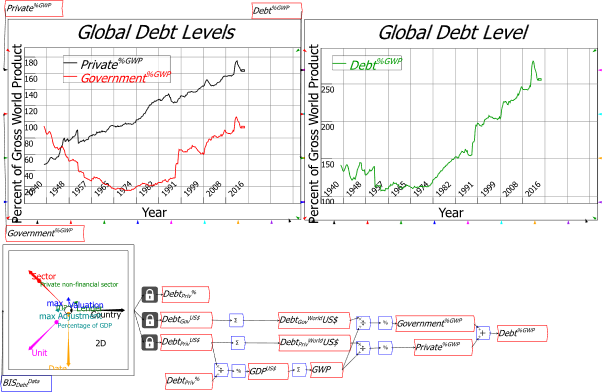

With the parameter values used in Figure 15, the new system state in this model (the level of private debt) has an equilibrium which is comparable to the level of the 1950s. But as Figure 16 illustrates, this was no equilibrium: the ratio rose substantially, and nearly constantly, until hitting a peak of 170% of GDP in 2008.

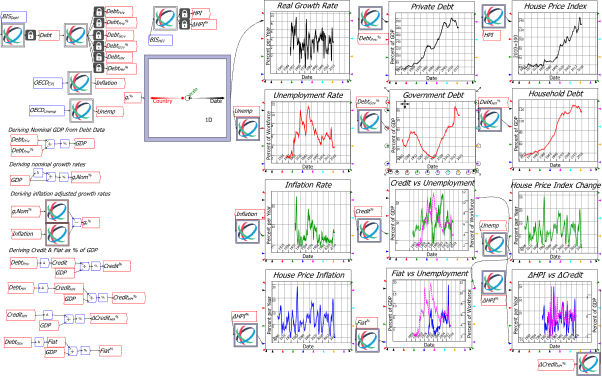

Figure 16: USA Private Debt level since WWII (https://www.bis.org/statistics/full_data_sets.htm)

If we choose parameter values for the model that generate this peak level of private debt as an equilibrium—by changing the slope of the investment function from 5 to 5.86—then we get an entirely different class of dynamics from this model. What Costa-Lima and Grasselli characterized as the “good equilibrium” of this model (Costa Lima, Grasselli, Wang, and Wu 2014, p. 35) becomes an unstable “strange attractor”, while the “bad equilibrium”—of a zero level of employment, a zero wages share, and an infinite debt ratio—becomes a stable attractor. If run for long enough, the model eventually collapses into zero wages share, zero employment, and an infinite debt ratio.

One emergent property of this model is that, with parameter values that lead to a private debt crisis, the volatility of the model declines prior to the crisis, in a manner which was replicated by the real world in the “Great Moderation” that preceded the “Great Recession” of 2007. This phenomenon is more obvious with nonlinear behavioural functions, which are applied in the model shown in Figure 18. The nonlinear functions shown in Figure 17 are generalized exponentials. These give a consistent curvature compared to a linear function, and rule out anomalies like negative investment. I’ve used linear functions for workers’ wage demands and capitalist investment decisions thus far, not because they’re more realistic—far from it—but because their use confirms that the cyclical behaviour of the models is driven, not by assumptions imposed by the modeller, but by the structure of the economy itself.

Figure 17: Nonlinear versus linear functions for wage change, investment and government spending change
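To make the contrast concrete, here is a minimal sketch of one common way to write a generalized exponential. The functional form, argument names and numbers are assumptions for illustration, not taken from this chapter: the curve passes through a reference point with a chosen slope, like the linear function it replaces, but never falls below a floor.

```python
import math

def gen_exp(x, x_ref, y_ref, slope, floor):
    """Exponential through (x_ref, y_ref) with the given slope there, bounded below by floor.

    Assumes y_ref > floor and slope > 0.
    """
    span = y_ref - floor
    return span * math.exp(slope * (x - x_ref) / span) + floor

def linear(x, x_ref, y_ref, slope):
    """Straight line through (x_ref, y_ref) with the given slope."""
    return y_ref + slope * (x - x_ref)

# Hypothetical investment function: 20% of GDP invested at a 5% profit rate,
# slope 5 at that point, and investment never below zero.
X_REF, Y_REF, SLOPE, FLOOR = 0.05, 0.2, 5.0, 0.0

for profit_rate in (-0.5, 0.0, 0.05, 0.1):
    nonlinear_value = gen_exp(profit_rate, X_REF, Y_REF, SLOPE, FLOOR)
    linear_value = linear(profit_rate, X_REF, Y_REF, SLOPE)
    print(f"profit rate {profit_rate:+.2f}: nonlinear {nonlinear_value:.4f}, linear {linear_value:+.4f}")
```

At the reference point both functions agree in value and slope. At a deeply negative profit rate the linear function implies negative gross investment, while the exponential stays just above its floor.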

With these functions and parameter values, the pure credit model undergoes a debt-induced crisis—see Figure 18.

Figure 18: A Private-Debt-induced Crisis with a nonlinear investment function

This still very simple and stylized model has an important real-world implication, which I noted in the conclusion to my first paper on this topic, “Finance and Economic Breakdown: Modelling Minsky’s Financial Instability Hypothesis” (Keen 1995): a period of tranquillity in a capitalist economy is not inherently a good thing, but can in fact be a warning that a crisis is approaching:

From the perspective of economic theory and policy, this vision of a capitalist economy with finance requires us to go beyond that habit of mind that Keynes described so well, the excessive reliance on the (stable) recent past as a guide to the future. The chaotic dynamics explored in this paper should warn us against accepting a period of relative tranquility in a capitalist economy as anything other than a lull before the storm. (Keen 1995, p. 634. Emphasis added)

In contrast, equilibrium-obsessed Neoclassical economists saw the “Great Moderation” as a “welcome change to the economy” (Bernanke 2004), and actually attributed it to their successful management of the economy:

The sources of the Great Moderation remain somewhat controversial, but as I have argued elsewhere, there is evidence for the view that improved control of inflation has contributed in important measure to this welcome change in the economy. (Bernanke 2004)

The other emergent property of the model is equally striking: though firms (capitalists) are the ones doing the borrowing in this model, it is the workers who pay for rising debt via a declining workers’ share of income. Profits cycle around the equilibrium level until the crisis, while workers’ incomes decline as a direct effect of the rising income share going to banks.

This model is, of course, a mathematical rendition of Hyman Minsky’s “Financial Instability Hypothesis” (Minsky 1975, 1982). Though other specialists on Minsky emphasise his classification of finance into Hedge, Speculative and Ponzi Finance, and the change in the relative proportions of these financial archetypes through the business cycle, my favourite expression of the FIH as a dynamic process is the following from “The Financial Instability Hypothesis: An Interpretation of Keynes and an Alternative to ‘Standard’ Theory”:

The natural starting place for analyzing the relation between debt and income is to take an economy with a cyclical past that is now doing well. The inherited debt reflects the history of the economy, which includes a period in the not-too-distant past in which the economy did not do well. Acceptable liability structures are based upon some margin of safety so that expected cash flows, even in periods when the economy is not doing well, will cover contractual debt payments.

As the period over which the economy does well lengthens, two things become evident in board rooms. Existing debts are easily validated and units that were heavily in debt prospered; it paid to lever. After the event it becomes apparent that the margins of safety built into debt structures were too great. As a result, over a period in which the economy does well, views about acceptable debt structure change. In the deal-making that goes on between banks, investment bankers, and businessmen, the acceptable amount of debt to use in financing various types of activity and positions increases. This increase in the weight of debt financing raises the market price of capital assets and increases investment. As this continues the economy is transformed into a boom economy…

It follows that the fundamental instability of a capitalist economy is upward. The tendency to transform doing well into a speculative investment boom is the basic instability in a capitalist economy. (Minsky 1977, pp. 12-13; 1982, pp. 66-67. Emphasis added)

Minsky’s genius was his capacity to see this fundamental instability of capitalism, free of the hobbling Neoclassical assumption of equilibrium, and to relate this to the level of private debt—an insight he garnered from Fisher (Fisher 1933) rather than from Keynes, whom he didn’t properly appreciate until he read the brief essay “The General Theory of Employment” (Keynes 1937) in 1968 (Minsky 1969a, p. 9, footnote 6; 1969b, p. 225).

We now have three-quarters of a foundational dynamic model of capitalism. The final element needed to complete this model (prior to the introduction of prices) is a government sector.


Stabilising an Unstable Economy

A government sector is added by applying Kalecki’s insight that net government spending adds to profits. Kalecki began with Table 5, which shows GDP in terms of both income (the left-hand column) and expenditure:

Table 5: Kalecki’s basic Income and Expenditure Identity (Kalecki 1954, p. 45)

Assuming that workers spend all of their incomes, Kalecki equated Gross Profits to the sum of Gross Investment and Capitalists’ Consumption, and then asked the causal question: which comes first? His answer was that:

it is clear that capitalists may decide to consume and to invest more in a given period than in the preceding one, but they cannot decide to earn more. It is, therefore, their investment and consumption decisions which determine profits, and not vice versa. (Kalecki 1954, p. 46. Emphasis added)

Kalecki then included government expenditure and taxes and exports, and allowed for some saving by workers. Simplifying his final equation somewhat, this led to the relationship that:
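For reference, Kalecki's two identities can be sketched as below. The first is the closed-economy case described above, in which workers spend all of their incomes. The second is my recollection of the general form in Kalecki (1954), with government, trade and workers' saving included; it is offered as an aid to the reader and is not necessarily the simplified relationship intended here.

```latex
\begin{aligned}
\text{Gross profits} &= \text{Gross investment} + \text{Capitalists' consumption} \\[4pt]
\text{Gross profits net of taxes} &= \text{Gross investment} + \text{Capitalists' consumption} \\
&\quad + \text{Budget deficit} + \text{Export surplus} - \text{Workers' saving}
\end{aligned}
```

Read causally, in Kalecki's sense, the second identity says that a larger budget deficit adds to profits for given investment and consumption decisions.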

The model in this section follows Kalecki (Kalecki 1954, p. 49) by redefining profit to include net government spending (spending in excess of taxation), adds an equation for the rate of change of net government spending as a function of the level of unemployment, and a differential equation for government net spending:

Figure 19 simulates this system with nonlinear behavioural functions and the same system parameters as the unstable private-sector-only model in Figure 18, to illustrate the result that, as Hyman Minsky argued, “big government virtually ensures that a great depression cannot happen again” (Minsky 1982, p. xxxii). However, the process is more complex (fittingly) than Minsky could envisage with purely verbal reasoning.

The definitive treatment of the dynamic and stability properties of this model is given by Costa-Lima, Grasselli et al. in “Destabilizing a stable crisis: Employment persistence and government intervention in macroeconomics” (Costa Lima, Grasselli, Wang, and Wu 2014), though their model was more complicated than the one shown here.

The obvious outcome, that the model remains cyclical but does not undergo a breakdown, is due to a characteristic of complex dynamic systems known as persistence. Given how common this phenomenon is in real-world systems, and the unfamiliarity of economists with this concept, it is worth quoting their paper at length:

Persistence theory studies the long-term behaviour of dynamical systems, in particular the possibility that one or more variables remain bounded away from zero. Typical questions are, for example, which species in a model of interacting species will survive over the long-term, or whether it is the case that in an endemic model an infection cannot persist in a population due to the depletion of the susceptible population.

In our context, we are interested in establishing conditions in economic models that prevent one or more key economic variables, such as the employment rate, from vanishing… we prove … that under a variety of alternative mild conditions on government subsidies, the model describing the economy is uniformly weakly persistent with respect to the employment rate …

[W]e can guarantee that the employment rate does not remain indefinitely trapped at arbitrarily small values. This is in sharp contrast with what happens in the model without government intervention, where the employment rate is guaranteed to converge to zero and remain there forever if the initial conditions are in the basin of attraction of the bad equilibrium corresponding to infinite debt levels… no matter how disastrous the initial conditions are, a sufficiently responsive government can bring the economy back from a state of crises associated with zero employment rates…

On the other hand … austerity implies that the government cannot prevent the economy from remaining trapped in the basin of attraction of at least one of the bad equilibria, which is of course an undesirable outcome. (Costa Lima, Grasselli, Wang, and Wu 2014, pp. 31, 37. Emphasis added)

Figure 19: Net government spending stabilizes the previously unstable system


Conclusion

Though these models are still very simple, they generate a picture of the economy that is at once totally different to the Neoclassical fantasy of eternal (but sometimes exogenously shocked) equilibrium, and empirically much easier to fit to actual data. In the next Chapter I show that these models can also be developed by following the causal approach of system dynamics, which also makes it easier to add further real-world complexities to the basic models. Blanchard’s dream of “a widely accepted analytical macroeconomic core, in which to locate discussions and extensions” is alive and well, but only if the dead-end of Neoclassical equilibrium modelling is abandoned.

Bellino, Enrico. 2013. ‘On the stability of the Ramsey accumulation path (MPRA Paper No. 44024).’ in Levrero S., Palumbo A. and Stirati A. (eds.), Sraffa and the Reconstruction of Economic Theory (Palgrave Macmillan: Houndmills, Basingstoke, Hampshire, UK).

Bernanke, Ben S. 2004. “Panel discussion: What Have We Learned Since October 1979?” In Conference on Reflections on Monetary Policy 25 Years after October 1979. St. Louis, Missouri: Federal Reserve Bank of St. Louis.

Blanchard, Olivier. 2016. ‘Do DSGE Models Have a Future?’, Peterson Institute for International Economics. https://www.piie.com/publications/policy-briefs/do-dsge-models-have-future.

Blatt, John M. 1983. Dynamic economic systems: a post-Keynesian approach (Routledge: New York).

Costa Lima, B., M. R. Grasselli, X. S. Wang, and J. Wu. 2014. ‘Destabilizing a stable crisis: Employment persistence and government intervention in macroeconomics’, Structural Change and Economic Dynamics, 30: 30-51.

Fisher, Irving. 1933. ‘The Debt-Deflation Theory of Great Depressions’, Econometrica, 1: 337-57.

Goodwin, R. M. 1966. ‘Cycles and Growth: A growth cycle’, Econometrica, 34: 46.

Goodwin, Richard M. 1967. ‘A growth cycle.’ in C. H. Feinstein (ed.), Socialism, Capitalism and Economic Growth (Cambridge University Press: Cambridge).

Grasselli, Matheus R., and Aditya Maheshwari. 2017. ‘A comment on ‘Testing Goodwin: growth cycles in ten OECD countries’’, Cambridge Journal of Economics, 41: 1761-66.

Harvie, David. 2000. ‘Testing Goodwin: Growth Cycles in Ten OECD Countries’, Cambridge Journal of Economics, 24: 349-76.

Jevons, William Stanley. 1888. The Theory of Political Economy (Library of Economics and Liberty: Internet).

Kalecki, M. 1954. Theory of Economic Dynamics: An Essay on Cyclical and Long-Run Changes in Capitalist Economy (MacMillan: London).

Keen, Steve. 1995. ‘Finance and Economic Breakdown: Modeling Minsky’s ‘Financial Instability Hypothesis’’, Journal of Post Keynesian Economics, 17: 607-35.

———. 2020. ‘Emergent Macroeconomics: Deriving Minsky’s Financial Instability Hypothesis Directly from Macroeconomic Definitions’, Review of Political Economy, 32: 342-70.

Keynes, J. M. 1937. ‘The General Theory of Employment’, The Quarterly Journal of Economics, 51: 209-23.

Li, Tien-Yien, and James A. Yorke. 1975. ‘Period Three Implies Chaos’, The American Mathematical Monthly, 82: 985-92.

Minsky, Hyman P. 1969a. ‘The New Uses of Monetary Powers’, Nebraska Journal of Economics and Business, 8: 3-15.

———. 1969b. ‘Private Sector Asset Management and the Effectiveness of Monetary Policy: Theory and Practice’, Journal of Finance, 24: 223-38.

———. 1975. John Maynard Keynes (Columbia University Press: New York).

———. 1977. ‘The Financial Instability Hypothesis: An Interpretation of Keynes and an Alternative to ‘Standard’ Theory’, Nebraska Journal of Economics and Business, 16: 5-16.

———. 1982. Can “it” happen again? : essays on instability and finance (M.E. Sharpe: Armonk, N.Y.).

Pomeau, Yves, and Paul Manneville. 1980. ‘Intermittent transition to turbulence in dissipative dynamical systems’, Communications in Mathematical Physics, 74: 189-97.

Ramsey, F. P. 1928. ‘A Mathematical Theory of Saving’, The Economic Journal, 38: 543-59.

Solow, R. M. 2010. “Building a Science of Economics for the Real World.” In House Committee on Science and Technology Subcommittee on Investigations and Oversight. Washington.

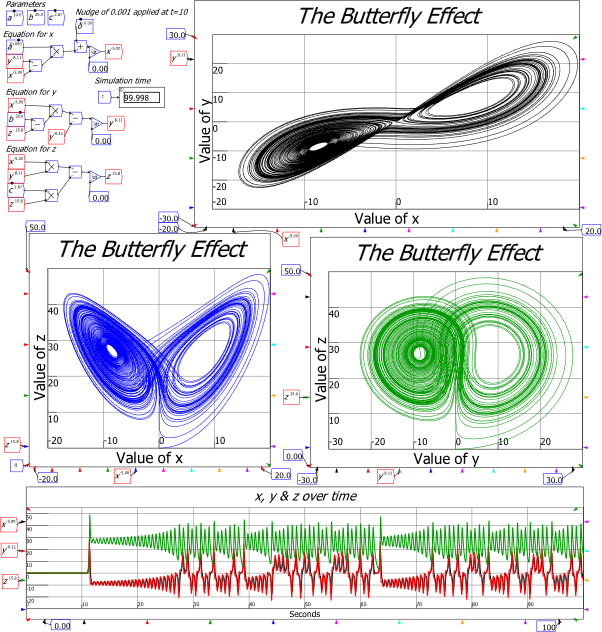

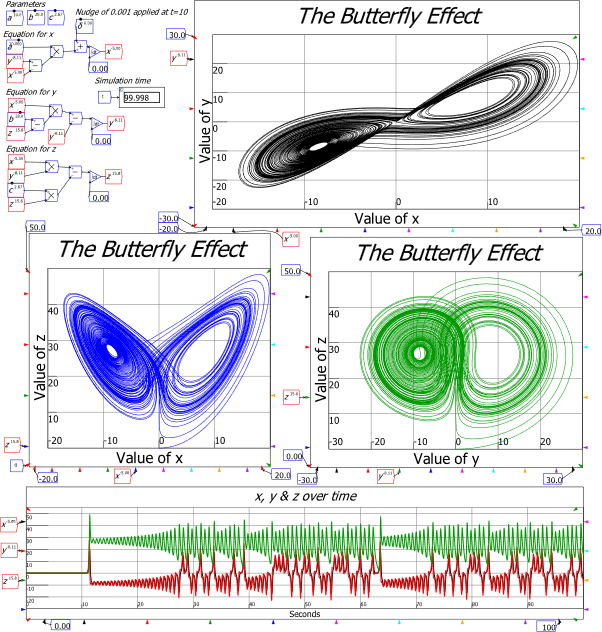

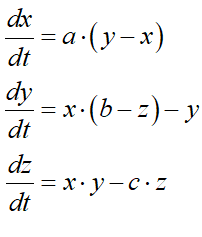

, which were the initial condition of the simulation shown in Figure 4. I then nudged the x-value 0.001 away from its equilibrium at the ten second mark. After this disturbance, the system was propelled away from this unstable equilibrium towards the other two—the “eyes” in the three phase plots. These equilibria are “strange attractors”, which means that they describe regions that the system will never reach—even though they are also equilibria of the system.
